Caching is a crucial technique used in modern computing to improve system performance and reduce latency. It involves storing frequently accessed data closer to the users, reducing the need to retrieve the same data repeatedly from the original source.

Centralized Cache Vs Distributed Cache

Caching can be implemented in different ways, depending on the architecture and requirements of the system. Two common approaches are Centralized Cache and Distributed Cache.

Centralized Cache

In a Centralized Cache setup, a single cache instance is shared among all the nodes or services in the system. This means that whenever any node needs to access cached data, it sends a request to the centralized cache. If the requested data is present in the cache, it is quickly returned, reducing the need to access the original data source. Centralized Cache simplifies cache management as there is only one cache to update, but it can become a bottleneck in high-traffic scenarios.
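The lookup flow described above can be sketched in a few lines. This is a minimal illustration, not a production client: `CentralCache` is a hypothetical in-memory stand-in for a shared cache server such as Redis, and `read_through` and `load_user` are names invented for this example.

```python
from typing import Callable, Dict, List, Optional


class CentralCache:
    """Hypothetical stand-in for a single shared cache server (in-memory here)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)

    def set(self, key: str, value: str) -> None:
        self._store[key] = value


def read_through(cache: CentralCache, key: str,
                 load_from_source: Callable[[str], str]) -> str:
    """Return the cached value; go to the original source only on a miss."""
    value = cache.get(key)
    if value is None:
        value = load_from_source(key)
        cache.set(key, value)
    return value


# Track how often the (hypothetical) original data source is hit.
source_calls: List[str] = []


def load_user(key: str) -> str:
    source_calls.append(key)
    return f"profile-for-{key}"


shared = CentralCache()
first = read_through(shared, "user:1", load_user)   # miss: loads from source
second = read_through(shared, "user:1", load_user)  # hit: served from cache
```

Every node would call the same `shared` instance, which is what makes invalidation simple and also what makes that instance a potential bottleneck.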

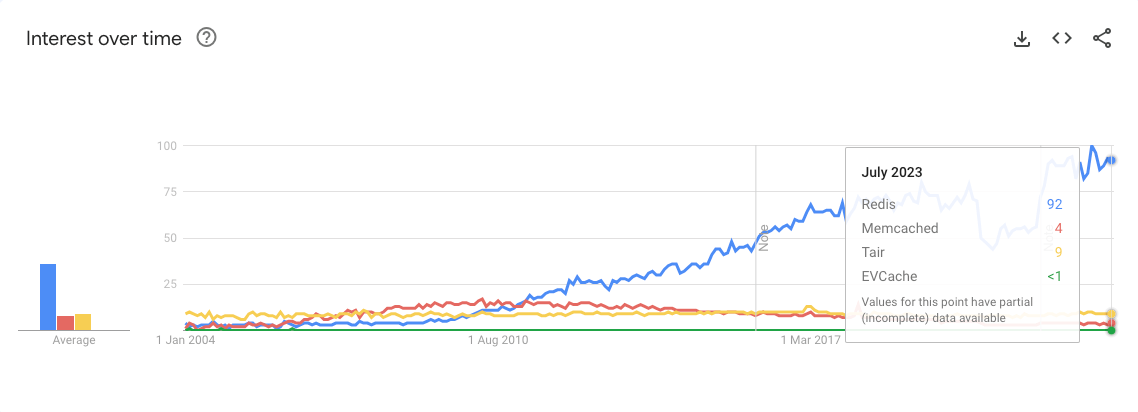

There are various centralized cache solutions; Redis is the most popular one.

Figure: Redis vs Memcached vs Alibaba/Tair vs Netflix/EVCache. Source: Google Trends

Distributed Cache

Distributed Cache, on the other hand, involves multiple cache instances distributed across various nodes or services in the system. Each node maintains its local cache, and data is distributed among these caches based on a hashing or partitioning mechanism. Distributed caching offers better scalability and load balancing since the cache workload is distributed across multiple nodes. It also reduces the chances of a single point of failure, enhancing system reliability. However, managing consistency and synchronization across distributed caches can be more complex compared to a centralized approach.
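One common partitioning mechanism is consistent hashing, which maps both cache nodes and keys onto a ring so that adding or removing a node only remaps the keys owned by that node. The sketch below is illustrative only: the `HashRing` class, the node names, the choice of MD5, and the 100 virtual nodes per server are all assumptions made for this example.

```python
import bisect
import hashlib
from typing import List, Tuple


def _hash(value: str) -> int:
    """Map a string to a position on the ring (MD5 chosen for illustration)."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    def __init__(self, nodes: List[str], vnodes: int = 100) -> None:
        self._vnodes = vnodes
        self._ring: List[Tuple[int, str]] = []  # sorted (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each node gets several virtual points to even out the key spread.
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove_node(self, node: str) -> None:
        self._ring = [entry for entry in self._ring if entry[1] != node]

    def node_for(self, key: str) -> str:
        """Return the first node clockwise from the key's position."""
        if not self._ring:
            raise ValueError("ring has no nodes")
        idx = bisect.bisect_right(self._ring, (_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


ring = HashRing(["cache-a", "cache-b", "cache-c"])
keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.node_for(k) for k in keys}

ring.remove_node("cache-c")
after = {k: ring.node_for(k) for k in keys}

# Only keys that lived on the removed node should change owner.
moved = [k for k in keys if before[k] != after[k]]
```

With a plain `hash(key) % node_count` scheme, removing one node would remap most keys; here only the keys previously owned by `cache-c` move.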

Ehcache and Google Guava Cache are two of the most popular local cache solutions today. Because of its large scale, our system uses Consistent Hashing with Ehcache. An example ehcache.xml:

<cache

Soft TTL Vs Hard TTL

Soft TTL

In Soft TTL, cached data can still be served after its TTL expires. When a client requests the stale data, the cache may serve it with a notification indicating that the data has expired. The cache update thread then asynchronously fetches the updated data from the original source and refreshes the cache. Soft TTL provides a smoother user experience because requests do not wait on the original source and cache misses are minimized, at the cost of briefly serving outdated data. It is suitable for scenarios that do not require strong consistency.

Hard TTL

In Hard TTL, cached data becomes invalid as soon as its TTL expires. When a client requests the data after its expiration, the cache returns a cache miss, and the client needs to retrieve the updated data from the original source. Hard TTL bounds how stale served data can be but may result in more cache misses, so in practice we normally set a Soft TTL that expires before the Hard TTL to balance latency and consistency.
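A rough sketch of combining the two TTLs is shown below. It is an illustration under stated assumptions rather than how any particular library works: `TwoTierTtlCache`, `pending_refresh`, and `run_pending_refreshes` are hypothetical names, the clock is injected so the behavior can be shown deterministically, and the background refresh thread is replaced by an explicit method call.

```python
import time
from typing import Callable, Dict, List, Tuple


class TwoTierTtlCache:
    """Serve fresh data before soft_ttl, stale data until hard_ttl, then miss."""

    def __init__(self, loader: Callable[[str], str], soft_ttl: float,
                 hard_ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        if soft_ttl > hard_ttl:
            raise ValueError("soft_ttl must not exceed hard_ttl")
        self._loader = loader
        self._soft_ttl = soft_ttl
        self._hard_ttl = hard_ttl
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # key -> (value, stored_at)
        self.pending_refresh: List[str] = []

    def get(self, key: str) -> str:
        entry = self._entries.get(key)
        if entry is not None:
            value, stored_at = entry
            age = self._clock() - stored_at
            if age < self._soft_ttl:
                return value  # fresh
            if age < self._hard_ttl:
                # Soft-expired: serve the stale value and queue a refresh.
                if key not in self.pending_refresh:
                    self.pending_refresh.append(key)
                return value
        # Never cached or hard-expired: the caller waits for the source.
        return self._load(key)

    def _load(self, key: str) -> str:
        value = self._loader(key)
        self._entries[key] = (value, self._clock())
        return value

    def run_pending_refreshes(self) -> None:
        """Stand-in for the asynchronous cache update thread."""
        while self.pending_refresh:
            self._load(self.pending_refresh.pop(0))


now = [0.0]       # fake clock, in seconds
version = [0]     # bumps each time the hypothetical source is read


def load(key: str) -> str:
    version[0] += 1
    return f"{key}-v{version[0]}"


cache = TwoTierTtlCache(load, soft_ttl=5, hard_ttl=30, clock=lambda: now[0])
a = cache.get("k")             # miss, loads v1
now[0] = 10
b = cache.get("k")             # soft-expired: stale v1 served, refresh queued
cache.run_pending_refreshes()  # background refresh loads v2
c = cache.get("k")             # fresh v2
now[0] = 100
d = cache.get("k")             # hard-expired: synchronous load of v3
```

The soft TTL controls how often the source is refreshed in the background, while the hard TTL caps how stale a served value can ever be.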

Negative Cache

A Negative Cache stores failed results, such as exceptions, so that repeated failing requests do not overload the system. Our system had a scenario in which an access-denied exception occurred for a single customer with a large fanout. The downstream service experienced connection exhaustion because the upstream service did not cache the access-denied exception and kept sending requests to the downstream service. Adding a Negative Cache protected the downstream service. However, to avoid prolonged errors for the customer, we kept the Negative Cache TTL short (1 second).
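The idea can be sketched as a thin wrapper around the downstream call. This is an assumption-laden illustration, not the system's actual code: `AccessDenied`, `NegativeCachingClient`, and `downstream` are hypothetical names, and the clock is injected so the 1-second TTL can be demonstrated without sleeping.

```python
import time
from typing import Callable, Dict, List, Tuple


class AccessDenied(Exception):
    """Hypothetical error raised by the downstream service."""


class NegativeCachingClient:
    """Remember recent access-denied failures so retries skip the downstream call."""

    def __init__(self, call_downstream: Callable[[str], str],
                 negative_ttl: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._call_downstream = call_downstream
        self._negative_ttl = negative_ttl
        self._clock = clock
        self._failures: Dict[str, Tuple[str, float]] = {}  # key -> (message, expires_at)

    def fetch(self, key: str) -> str:
        cached = self._failures.get(key)
        if cached is not None:
            message, expires_at = cached
            if self._clock() < expires_at:
                # Fail fast from the negative cache; no downstream request is made.
                raise AccessDenied(message)
            del self._failures[key]
        try:
            return self._call_downstream(key)
        except AccessDenied as error:
            self._failures[key] = (str(error), self._clock() + self._negative_ttl)
            raise


now = [0.0]            # fake clock, in seconds
calls: List[str] = []  # requests that actually reached the downstream service


def downstream(customer_id: str) -> str:
    calls.append(customer_id)
    raise AccessDenied(f"{customer_id} is not allowed")


client = NegativeCachingClient(downstream, negative_ttl=1.0, clock=lambda: now[0])


def try_fetch(customer_id: str) -> str:
    try:
        return client.fetch(customer_id)
    except AccessDenied:
        return "denied"


results = [try_fetch("customer-42") for _ in range(100)]  # a burst of fanout requests
calls_within_ttl = len(calls)

now[0] = 1.5                 # the negative entry has expired
try_fetch("customer-42")
calls_after_ttl = len(calls)
```

In this sketch the burst of 100 requests reaches the downstream service once, and the short TTL means a customer whose access is later granted sees errors for at most about one second.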